TMW #139 | The long tail of generative AI

Welcome to The Martech Weekly, where every week I review some of the most interesting ideas, research, and latest news. I look to where the industry is going and what you should be paying attention to.

👋 Get TMW every Sunday

TMW is the fastest and easiest way to stay ahead of the Martech industry. Sign up to get the full version of this and every TMW delivered every Sunday, along with an invite to the TMW community.

The long tail of generative AI

My kids have a sneaky habit of keeping all their arts and crafts projects on a shelf near our dining room table. The other night, the stack of glittery bits of cardboard, half-finished drawings, and clumps of Play-Doh tipped over, causing quite the mess right in the middle of dinner.

When I tried to throw some of it away, my daughter had a meltdown; she wanted to keep all of her creations for as long as possible. This is my mental picture of another kind of arts-and-crafts accumulation: generative AI.

We’re now nine months past the moment OpenAI’s ChatGPT became the catalyst for a new category of generative technology, spawning countless audio, video, imagery, and text-based AI startups.

Now, a lot of the dust has settled, and large swaths of the Martech industry have announced their bolt-on / screw-in / add-on features. It’s not only the tech, though. We’ve had numerous AI doomsayers posting end-of-the-world treatises calling for a pause on the technology; Sam Altman doing his political tour to sway regulation in OpenAI’s favor; oh-so-many reports on marketing and consumer adoption of generative AI; and countless podcasts, blogs, and newsletters dissecting, arguing over, and opining on this technology’s disruptive potential.

John Thornhill, Innovation Editor at the Financial Times, summarizes our moment well:

“Even by the breathless standards of previous technology hype cycles, the generative artificial intelligence enthusiasts have been hyperventilating hard.”

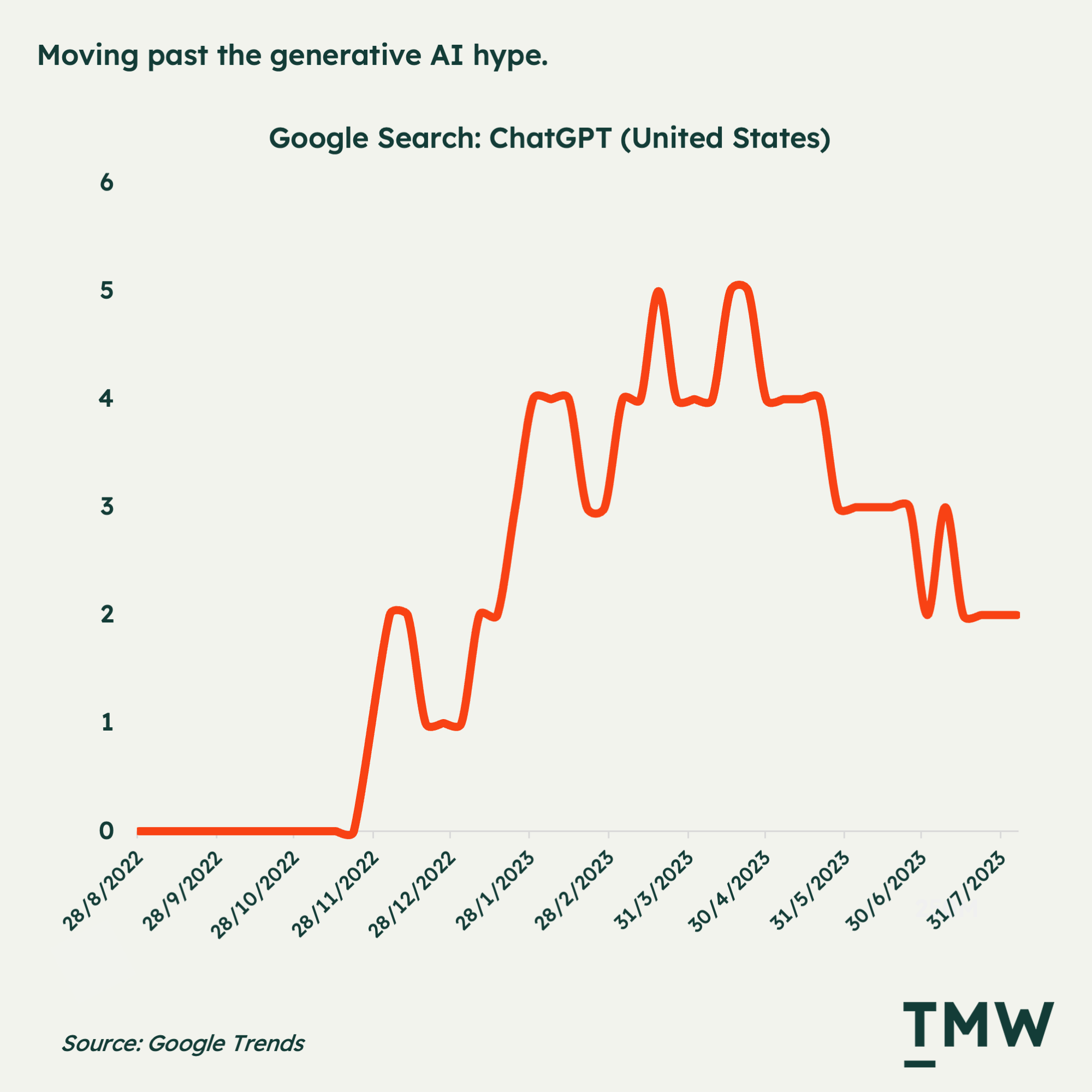

All of this is happening as OpenAI sees slowing user growth, web traffic, and general public interest, while the cost of running ChatGPT has grown to $700k a day.

Clearly, the hype is petering out as generative AI finds its place in the broader technology economy. So what happens next?

An exploding market

Despite the cooling interest, we are still in the middle of a huge investor hype cycle for generative AI, with more than $14 billion poured into new generative AI startups in the US in 2023 alone. And while Martech investment is currently slowing down, AI companies are capturing much of that growth capital instead. In 2023, investment in generative AI startups has increased by a huge 464%, one of the biggest year-on-year increases in startup investing ever seen.

With more than 300 new generative AI-focused startups launched this year and 13 companies reaching a billion-dollar valuation, speculation about the commercial opportunity in generative AI is at an all-time high.

And now there are a lot of new companies, flush with cash, looking for use cases or problems to solve with their new technology. Situations like this remind me of Abraham Maslow’s famous quote: “If the only tool you have is a hammer, you tend to see every problem as a nail.” But what if the tool isn’t just a hammer, but could also be a screwdriver, ratchet, or drill? There’s a lot of tech-first, problem-last thinking in the generative AI category because the technology is so malleable that you can make it into literally anything.

And because these new founders can tap into generative AI infrastructure, we’re seeing an explosion of apps across every industry, from tech to finance and healthcare to energy; there seem to be plenty of GPT-4-shaped nails to hammer down.

In early 2022, before generative AI blew up, I sketched out a framework for how marketers might want to use AI products. It looked like this:

In 2023 it now looks like this:

And that’s just the notable companies. There are still hundreds of new startups building generative AI solutions. As you can see, the marketing side of AI applications filled out considerably once the generative category started to gain traction.

Clearly, we’re now inundated by new products. But how are those solutions organized, and what does it look like? One way to think about it is that generative AI technology is not a new category, but a substrate for other categories to emerge.

Case in point: there are now generative AI products in almost everything:

- ChatGPT is a new personalization channel for consumers

- AI used to create and optimize ad creatives and campaigns

- AI sitting on top of digital asset management workflows

- Adobe’s generative tools in its suite of products to augment design tasks

- Tools that can use text prompts to build apps and software

- Data agents and co-pilots that work on top of data infrastructure to write code, analyze data, organize and pattern-match variables, and set up integrations

- Tools that scan the contents of videos to provide descriptions and summaries, AI that edits those videos down into social clips in seconds, and tools that create new kinds of AI-generated movies

- AI products to allow customers to interact with smart agents to get faster and smarter answers

- Tools to create thousands of creative variations for large-scale marketing campaigns

These are just a small sample of the more interesting ideas to hit the market in 2023, as generative AI continues to permeate many of our existing workflows, tasks, and business needs. But all of this sounds great until you discover that generative AI’s future is by no means certain.

Trust issues

While there’s been a smattering of interesting ideas, we’re also starting to see the long-tail problems of unleashing generative technology onto the internet play out. What we have on our hands is a tool that can generate images and content, build apps, troubleshoot problems, create videos, and modify existing content with a few keystrokes. This is producing a series of interesting and challenging second-order effects.

And the challenges are as broad as the applications of the technology: IP infringement, misinformation, hallucinations, changes to the landscape of consumer data collection, the devaluation of content and creativity, and the ongoing degradation of the platforms themselves. There are a lot of challenges to overcome if the technology is to have a shot at reaching the mass consumer.

Regular people need to trust the technology and how it serves them, but we’re still a long way from that. According to the Kearney Consumer Research Institute, of the 50% of consumers surveyed who had tried a generative AI technology, 44% said they don’t trust it.

There’s a reason for this, and you can see it in the sheer volume of pure AI-generated garbage flooding social media. A Europol study suggests that the vast majority of online content will soon be generated by AI. On sites like Instagram and Twitter, an increasing volume of fake content is churning through the algorithms, making it even harder to trust that what you’re seeing is real. We’ve seen glimpses of this with the AI-generated image of the Pope in a puffer coat and the now-famous Tom Cruise deepfake, which are only the tip of the iceberg of the potential harm this can create.

What this means is that marketers now have to respond to a different content environment. If consumers start to trust what they see less, they’ll trust your content less too. Supermassive social networks, as powerful as they are at distributing content and scaling awareness and engagement, betray a subtle problem: a few bad actors can still wreck it for everyone.

NewsGuard, a company that tracks misinformation, suggests that since the release of ChatGPT and Google’s Bard, there’s been little progress in reducing the amount of false information the AI tools propagate. When fed 100 false stories, both tools presented at least 80 of them as true.

If the current trajectory of generative content is anything to go by, then in a couple of years most public social networks, search platforms, and e-commerce websites will be overrun by AI-generated garbage, deepfakes, and nonsense. It’s not the best outlook. That’s why tools to root out, downgrade, and suppress generative AI content in these public channels are becoming increasingly important. The only problem? Even OpenAI’s own tools can’t reliably detect content generated by its own products. There’s a billion-dollar opportunity in solving the detection problem alone.

Sure, that problem is likely to get solved sooner rather than later, but because the technology to create generated content and the technology to detect it feed off each other, there doesn’t seem to be a way out. Especially now that Meta is open-sourcing its own lightweight LLM, it’s going to become even easier for anyone to generate endless streams of content at scale. With generative AI, detection and creation are locked in a mutually reinforcing arms race.

But it’s for this reason that Richard Hanania thinks that the growing volume of synthetic content will actually increase the value proposition for existing, trusted news outlets and brands:

“I’m more inclined to think cheap and convincing deep fakes will have the opposite impact, increasing the power and prestige of established institutions and discrediting most threats to their power. At the same time, the new technology will have negative effects on an impressionable minority of the public, that is, the same group of people that already falls for fake news.”

This seems a very likely outcome to me: the second-order effect of generative AI is a greater divide between premium (handmade) content and the open sewer of artificially generated content. This will make life harder for content creators with small followings, but it might actually reverse some of the damage the internet did to legacy media when it disrupted their power of distribution.

But that’s not the only second-order effect. There are now two class action lawsuits open against OpenAI, one for privacy violations and the other for copyright infringement. With claims of over $3 billion, they pose a serious challenge to the legal viability of generative AI tools, which is still an open question for regulators.

Copyright and IP protection is a huge problem for generative AI. Even artists who try to have themselves and their artwork removed from image-generation platforms can’t fully succeed. That’s why Adobe is now offering protection against copyright infringement claims arising from use of the company’s new generative AI products. The Grammys, too, have decided not to recognize works created entirely by generative AI, making only human creators eligible.

If generative AI can clear the legal hurdles of IP protection, it might have a chance of living up to the hype as a genuine paradigm shift and a step change for digital marketing. Before that happens, the general public needs to trust that the technology is both effective and good for society. Right now, that’s not looking good.

Bite your own tail

As the external issues rage on, another problem looms large over the industry: generative AI seems to be getting dumber. Recent reports suggest that the system is getting worse at solving some very basic problems, in part because of how it is shaped by user prompts.

This is the challenge with generative AI: everything rests on the quality of the training data. If the fresh training data needed to keep the models relevant is increasingly AI-generated, and if user prompts play a role in dumbing down the models, then we may be in a catch-22 with generative AI: damned if you use it, damned if you don’t.

This is perhaps the biggest problem with generative AI: the more it’s used, the less reliable its training data becomes. How do you solve a problem like that? There are no reasonable solutions yet to the biggest existential threat to the category.

There’s also a growing problem with the expanding scope of data collection, as companies look to train and deploy their own AI models. Zoom has caught mainstream media attention for a small change to its privacy policy about using customer data to train the video conferencing app’s proprietary AI models. The changes to its terms appear to offer no opt-out, meaning consumers will have to share their product usage data to train the company’s AI products.

Google also recently changed its privacy policy across all products, which will now allow the company to use publicly posted data on Google properties to train a variety of generative AI products.

There’s still so much grey area in this kind of data collection, and we lack a taxonomy to establish clear rules. Data used in aggregate to train an AI system isn’t necessarily stored long-term; it may only be used to refine the model.

So, is it data collection? Or internal use to improve products and services? No one has a clear answer about what to do with the even greater incentive to collect customer data and feed the black box. Between the dumbing down of AI tools and increased data collection, there seems to be a very real risk that the entire space might eat itself.

Both the privacy angle and the doom-loop scenario of LLMs increasingly having to train on their own output are unavoidable challenges if generative AI companies want to turn a good-but-not-quite-right product into something consumers can rely on. That is, if consumers even know what this technology is.

What’s ChatGPT?

Clearly, there’s a lot of spaghetti being thrown at the wall, along with some very real challenges. We’ll need more data before we can see what sticks and what gets solved. What we do know so far is that most marketers are not using the technology regularly. According to a new McKinsey study, marketing and sales have the highest share of regular generative AI use of any business function, yet only 14% of respondents in those functions actually use it regularly.

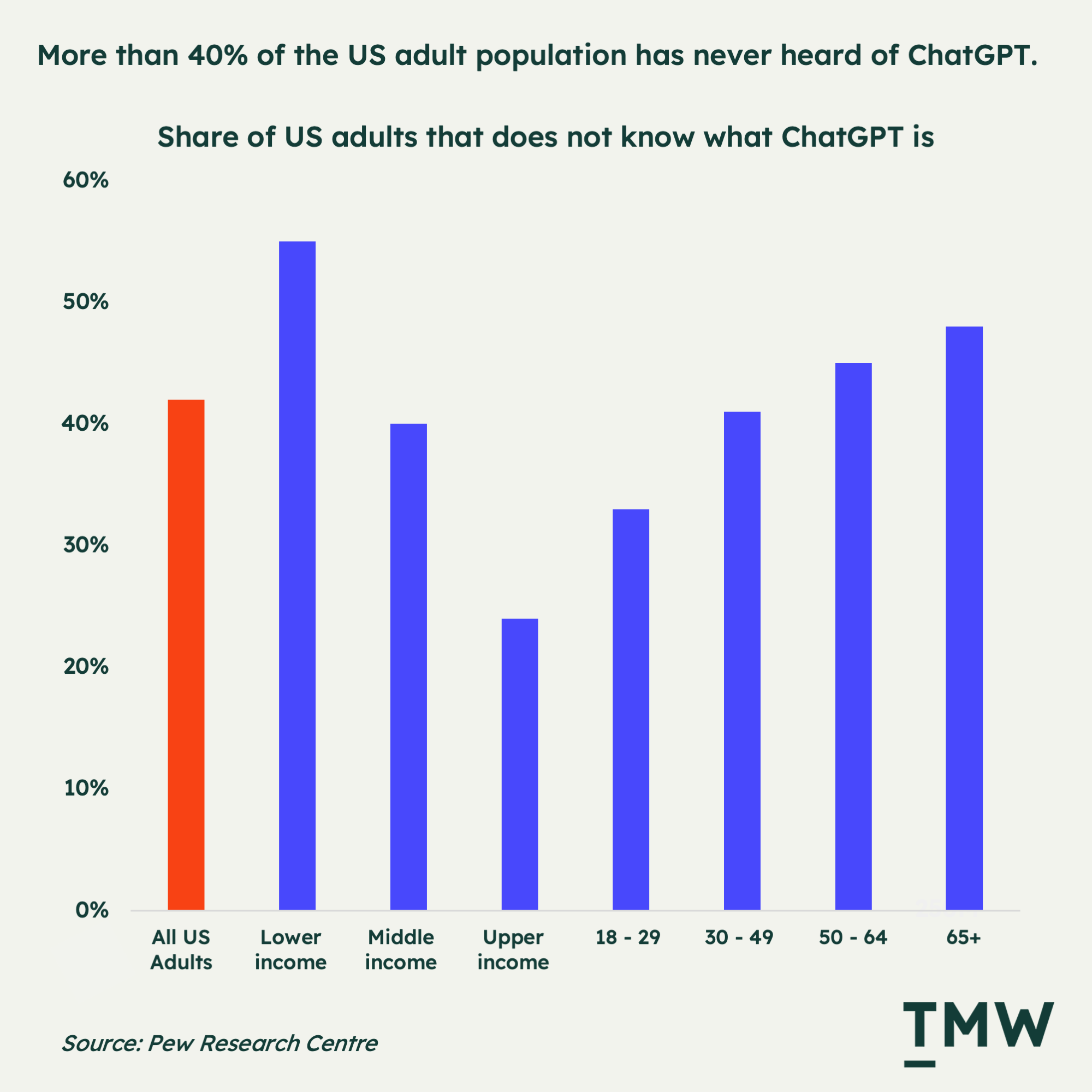

From a consumer perspective, it’s still very early for the category. Reports such as one from the Pew Research Center suggest that around 40% of Americans still haven’t heard of ChatGPT, and only 14% have actually tried it. The share who haven’t heard of it is even higher among lower-income groups and those over 50, who make up a large part of the US economy.

A lot of people have yet to learn about the technology, which is an opportunity to build more consumer-friendly tools. Generative AI is still the domain of the tech crowd, and as with mobile and the internet itself, it could take a decade to become a mainstream technology paradigm. The real opportunity is in getting regular people to use it. But eventually, it won’t be called ChatGPT, or Midjourney, or Bard. It will have other names and work as a substrate for a lot of other channels, in the same way that consumers don’t care about ISPs, cell phone towers, GSM networks, or SMTP. It’s all just necessary background.

Microsoft’s new Bing and Google’s Search Generative Experience are steps in this direction. The mass consumer opportunity is not in professional services but in search products, marketplaces, content, and the boring stuff of enterprise operations and data.

A long walk into the future

The innovation trigger has arrived, and there are plenty of problems to work on. But the real question for any marketer worth their salt is: does it lead to any commercial value?

OpenAI is projected to make $1 billion in revenue by 2024, which is impressive but a drop in the bucket compared to the ad businesses of Google, Meta, and even Amazon, all companies that have already harnessed algorithms for mass consumer experiences and channels. The jury is still out on the venture opportunity in AI, even though venture capital is the only thing supporting the entire industry’s growth and experimentation right now. Gary Marcus makes a lot of strong points in concluding that generative AI could turn out to be a dud.

In my view, marketers will, by and large, be reacting to generative AI rather than harnessing it. It’s not a tool but a reconfiguring force, with the potential to change the incentives, information pathways, and even our perception of reality on the web.

But it will take a while. Generative AI could equally become a self-destructive technology instead of a self-reinforcing technology like search and social algorithms. And it might just end up eating itself.

And while the entrepreneurial energy in building generative AI startups is happening at a fast clip and attracting billions in fresh funding, in reality, companies move slowly. And the ones that have all the capital to deploy into generative AI projects – the enterprises – are the slowest of them all.

The last big paradigm shift, the smartphone, took six to seven years before marketers and digital teams were really able and willing to build on top of Android and iPhone. It won’t be any different here. The hype is fizzling out, but the long tail will be a decade of small, incremental iterations, that is, if the problems of IP rights, misinformation, and the technology’s very real self-destructive loop can be solved.

Much like my kids with their crazy craft shelf, marketers need to explore, create, and try these new tools. But we must also be just as willing to throw them away if our ethical, commercial, or creative interests are no longer aligned with generative AI. As with any new technology shift, there’s a lot of waste, half-done projects, and well-meaning intentions. And sometimes it all goes to zero and everything goes into the recycling bin.

Stay Curious,

Want to share something interesting or be featured in The Martech Weekly? Drop me a line at juan@themartechweekly.com.